AI started, as new technologies often do, like a toy.

On March 25th, 2023, an image of Pope Francis wearing the, uh, papal puffer parka began to make the rounds on Twitter.

The image showed the 86-year-old sitting pontiff lookin certifiably blessed in a bespoke Moncler jacket. After it went viral, the image was revealed to be fake. It was made using Midjourney, a popular AI image generator accessible via Discord.

Newsrooms quickly dubbed the whole affair “the first real mass-level AI misinformation case.” But luckily, the Pope’s drippy outfit — while biblically dope and holy — was not super serious markets-moving stuff.

However, the arrest of a former president and leading political candidate is a slightly bigger deal.

Around the same time the Pope pic was circulating, separate deepfakes of Donald Trump getting arrested were also making the rounds.

The images spread fast online, and while they managed to fool many, they were soon debunked as fake. Yet again, the real-world impact was (thankfully) minimal.

Today (May 22, 2023) marks the third major case of an AI-generated image fooling the collective internet. And this time, it inflicted real damage.

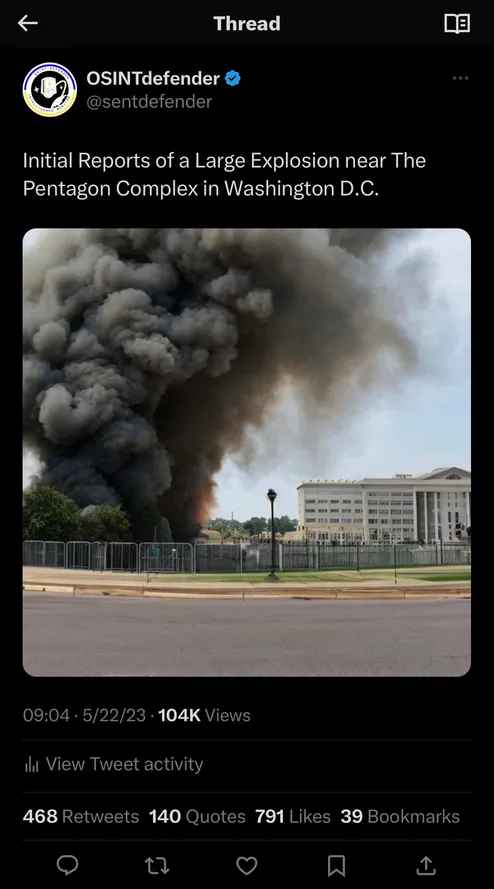

This morning, a deepfake of an explosion at the Pentagon surfaced. Multiple online news sources began reporting on it, including widely followed OSINT ("open source intelligence") accounts like OSINTdefender.

Amid the chaos, the S&P 500 fell 30 points within minutes, wiping out ~$250 billion in market cap.

Markets quickly rebounded once the image was confirmed fake.

Upon closer analysis, online sleuths noted that the same light post appears in both the foreground and the background: a telltale sign of AI.

Exactly one hour and 30 minutes after the initial tweet from OSINTdefender, the Pentagon itself issued a statement.

At this point, it remains unclear who created the image and why. But it really doesn’t matter. This most recent episode underscores a problem we’ll all face in the years to come: how to tell real from fake and human from AI.

Some believe that regulation is the answer. They say we must regulate companies like OpenAI to ensure they’re responsible shepherds of this world-altering technology.

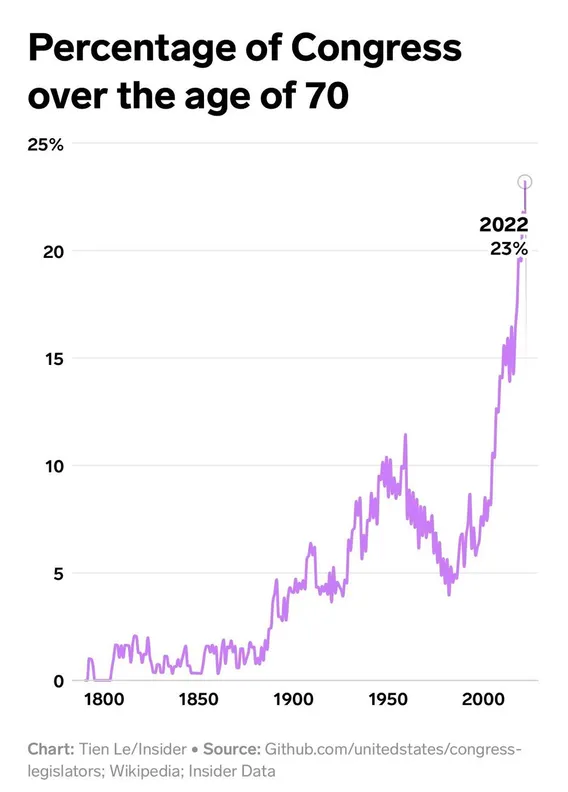

However, even if we do, by some minor miracle, manage to “regulate AI,” this would merely pass the responsibility of oversight from technologists to politicians and bureaucrats. And in an age where companies are seriously discussing the advent of artificial superintelligence (ASI)…

…it seems unwise to leave the most important decisions surrounding its deployment to a bunch of septuagenarians.

Yes, AI needs guardrails. But those guardrails need to be nimble enough to adapt as the technology rides the exponential.

What these new guardrails look like is anyone’s guess, but many in the crypto space believe blockchains will play a key role. Blockchains are really good at attribution. This isn’t great privacy-wise, but it offers an eerily perfect foil to AI’s negative externalities, like today’s artificial picture of an explosion at the Pentagon. It’s not hard to imagine a day when all digital media is signed with a private key, a stamp that authenticates its legitimacy and offers some “proof-of-human” guarantee.
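For the technically curious, here is roughly what that kind of stamp could look like. Treat it as a minimal sketch, not how any particular blockchain or provenance standard actually does it. The function names (`generate_keypair`, `sign`, `verify`) are made up for illustration. And because Python's standard library ships no public-key signatures, the sketch swaps in a Lamport one-time signature built on SHA-256; a real system would more likely use ECDSA or Ed25519 from a vetted library.

```python
import hashlib
import secrets


def sha256(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def generate_keypair():
    """Hypothetical helper: build a Lamport one-time key pair."""
    # Private key: 256 pairs of random secrets, one pair per bit of a SHA-256 digest.
    private_key = [
        (secrets.token_bytes(32), secrets.token_bytes(32)) for _ in range(256)
    ]
    # Public key: the hash of every secret. Safe to publish (e.g. on a blockchain).
    public_key = [(sha256(zero), sha256(one)) for zero, one in private_key]
    return private_key, public_key


def digest_bits(media: bytes):
    """Hash the media and return the digest as a list of 256 bits."""
    digest = sha256(media)
    return [(byte >> shift) & 1 for byte in digest for shift in range(7, -1, -1)]


def sign(media: bytes, private_key):
    """Reveal one secret per digest bit. A Lamport key must only ever sign ONE message."""
    return [private_key[i][bit] for i, bit in enumerate(digest_bits(media))]


def verify(media: bytes, signature, public_key) -> bool:
    """Check that every revealed secret hashes to the matching public-key entry."""
    if len(signature) != 256:
        return False
    bits = digest_bits(media)
    return all(
        sha256(secret) == public_key[i][bit]
        for i, (secret, bit) in enumerate(zip(signature, bits))
    )


# Usage: the byte strings below are placeholders standing in for real image files.
private_key, public_key = generate_keypair()
original = b"raw bytes of a photo"
signature = sign(original, private_key)

print(verify(original, signature, public_key))                          # True
print(verify(b"raw bytes of a doctored photo", signature, public_key))  # False
```

Note what this does and doesn't prove: a valid signature shows that whoever holds the private key signed these exact bytes, and that the bytes haven't changed since. It says nothing on its own about whether a human made the image. A "proof-of-human" guarantee would need a separate layer tying keys to real people.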

At its core, AI makes us question what it means to be human, so it’d be kinda poetic if it ended up being the ultimate catalyst behind crypto’s long-awaited “digital identity” narrative. As OpenAI and its competitors power full steam ahead in non-race conditions (wink, wink), we need our motley crew of internet-money-loving, pfp-clad cypherpunks more than ever to buidl the crypto-defined guardrails that ensure AI brings forth utopia, not dystopia.

I, for one, will be rooting for the builders in this space: you may be our only hope.